Large Memory Teramem

Hardware | |

Model | Lenovo ThinkSystem SR850 V2 |

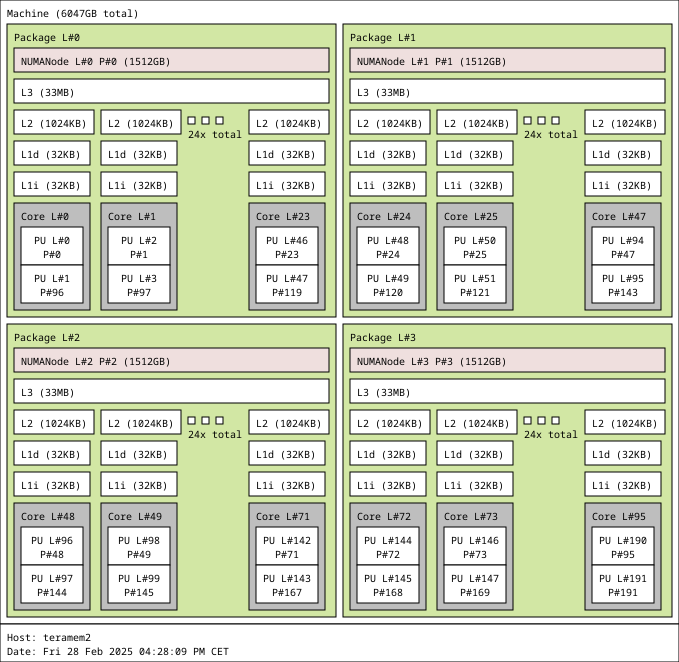

Processors | 4 x Intel Xeon Platinum 8360HL |

Number of nodes | 1 |

Cores per node | 96 |

Hyperthreads per core | 2 |

Core nominal frequency | 3.0 GHz (Range 0.8-4.2 GHz) |

Effective per-core memory | 64 GB |

| Memory per Node | 6,144 GB DDR4 |

Software (OS and development environment) | |

Operating system | SLES15 SP4 Linux |

MPI | Intel MPI, alternatively OpenMPI |

Compilers | Intel OneAPI |

Performance libraries | MKL, TBB, IPP |

Tools for performance and correctness analysis | Intel Cluster Tools |

Teramem System for Applications with Extreme Memory Requirements

The node teramem2 is a single node with 6 TBytes of main memory. It is part of the normal Linux Cluster infrastructure at LRZ, which means that users can access their $HOME and $PROJECT directories as on every other node in the cluster. However, its mode of operation differs slightly from that of the remaining cluster nodes, which can only be used in batch mode. Because teramem2 is currently the only system at LRZ that can satisfy memory requirements beyond 1 TByte in a single node, users can choose between batch and interactive mode, depending on their specific needs. Both options are covered in the documentation listed under Further Reading.
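The figures in the hardware table can be related to each other with a little arithmetic. The following is a minimal sketch in Python, using only the numbers listed above (6,144 GB, 96 cores, 2 hyperthreads per core). The helper `cores_for_memory` is hypothetical and assumes that memory is shared out proportionally per core. That rule is an illustration for sizing a request, not a statement of how LRZ schedules jobs on teramem2.

```python
import math

# Values copied from the hardware table.
MEMORY_PER_NODE_GB = 6144
CORES_PER_NODE = 96
HYPERTHREADS_PER_CORE = 2


def memory_per_core_gb() -> float:
    """Effective memory per physical core: total memory divided by core count."""
    return MEMORY_PER_NODE_GB / CORES_PER_NODE


def logical_cpus() -> int:
    """Number of hardware threads the node exposes with hyperthreading enabled."""
    return CORES_PER_NODE * HYPERTHREADS_PER_CORE


def cores_for_memory(required_gb: float) -> int:
    """Hypothetical helper: smallest number of cores whose proportional
    memory share covers `required_gb` (assumes a 64 GB share per core)."""
    if required_gb <= 0 or required_gb > MEMORY_PER_NODE_GB:
        raise ValueError("requirement must be in (0, 6144] GB")
    return math.ceil(required_gb / memory_per_core_gb())


if __name__ == "__main__":
    print(memory_per_core_gb())    # effective per-core memory in GB
    print(logical_cpus())          # hardware threads on the node
    print(cores_for_memory(2000))  # cores matching a 2,000 GB requirement
```

Under this proportional assumption, a job that needs 2,000 GB corresponds to the memory share of 32 cores, which gives a rough starting point for choosing a core count alongside a memory request.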

Further Reading

Please consult "Running large-memory jobs on the Linux Cluster" to learn more about running interactive or batch jobs on the Teramem.