GCS large scale project on SuperMUC-NG

Gauss Call 2024-1 (Call 31) for Large Scale Projects

Deadline

Twice per year, the Gauss Centre for Supercomputing (GCS) issues a Call for Large-Scale Projects, usually at the end of winter and at the end of summer.

The Gauss Call 2024-1 (Call 31) for Large Scale Projects is open from January 15 to February 14, 2024, 17:00 CET (strict deadline).

The call covers the period May 1, 2024, to April 30, 2025.

Projects are classified as “Large-Scale” if they require at least 45 million core-hours on SuperMUC-NG.
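As a rough aid for checking a planned campaign against this threshold, the following minimal sketch converts a node-based job plan into core-hours. The function names, the example campaign, and the figure of 48 cores per node are assumptions made for illustration; please take the actual node configuration from the SuperMUC-NG fact sheet.

```python
# Threshold from the call text: at least 45 million core-hours.
LARGE_SCALE_THRESHOLD = 45_000_000


def core_hours(nodes: int, cores_per_node: int, wall_hours: float, runs: int = 1) -> float:
    """Core-hours consumed by `runs` jobs, each using `nodes` nodes for `wall_hours`."""
    return nodes * cores_per_node * wall_hours * runs


def is_large_scale(total_core_hours: float) -> bool:
    """True if the request meets the large-scale threshold of the call."""
    return total_core_hours >= LARGE_SCALE_THRESHOLD


# Hypothetical campaign: 40 runs of 24 hours on 1024 nodes.
# cores_per_node=48 is an assumption; verify it against the fact sheet.
campaign = core_hours(nodes=1024, cores_per_node=48, wall_hours=24, runs=40)
print(f"{campaign:,.0f} core-hours, large-scale: {is_large_scale(campaign)}")
```

With these assumed numbers the campaign amounts to 47,185,920 core-hours and would therefore fall under the large-scale call.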

Applications are eligible from German universities and publicly funded German research institutions, e.g., the Max Planck Society and the Helmholtz Association. Researchers from outside Germany may apply through PRACE (https://prace-ri.eu/).

Answering the Call

Leading, ground-breaking projects should deal with complex, demanding, innovative simulations that would not be possible without the GCS infrastructure and that can benefit from the exceptional resources provided by GCS. Applications for a large-scale project must be submitted using the appropriate electronic application form, which can be accessed from the GCS web page:

https://www.gauss-centre.eu/for-users/hpc-access/

Please use the project description template for your GCS large-scale application. It can be reached from the above web page and is provided in PDF, DOCX, and LaTeX format.

Note that the regular application forms of the GCS member centres can also be reached from there.

Please note:

- Projects with a running large-scale grant must clearly indicate and justify this.

- Projects targeting multiple GCS platforms must clearly indicate and justify this.

- Projects applying for an extension must clearly indicate the differences from the previous application in the project description and must have submitted the reports for the previous application.

- Accepted large-scale projects must fulfil their reporting obligations.

- Project descriptions must not exceed 18 pages.

- Grants from or applications to all German computing centres and PRACE/EuroHPC have to be reported in the online application forms.

The proposals for large-scale projects will be reviewed with respect to their technical feasibility and peer-reviewed for a comparative scientific evaluation. On the basis of this evaluation, a GCS committee will approve projects for a period of one year and assign their allocations.

Criteria for decision

Applications for compute resources are evaluated only according to their scientific excellence and technical feasibility.

- The proposed scientific tasks must be scientifically challenging, and their treatment must be of substantial interest.

- Clear scientific goals and verifiable milestones on the way to reach these goals must be specified.

- The implementation of the project must be technically feasible on the available computing systems, and must be in reasonable proportion to the performance characteristics of these systems.

- The Principal Investigator must have a proven scientific record and must be able to successfully accomplish the proposed tasks. In particular, applicants must possess the necessary specialized know-how for the effective use of high-end computing systems. This has to be demonstrated in the application for compute resources, e.g., by presenting work done on smaller computing systems, scaling studies, etc.

- The specific features of the high-end computers should be optimally exploited by the program implementations. This will be checked regularly during the course of the project.

Further information:

- FactSheet-GCS-SUPERMUC-NG.pdf

- For further help please contact the member sites via https://www.gauss-centre.eu/service/contact/.

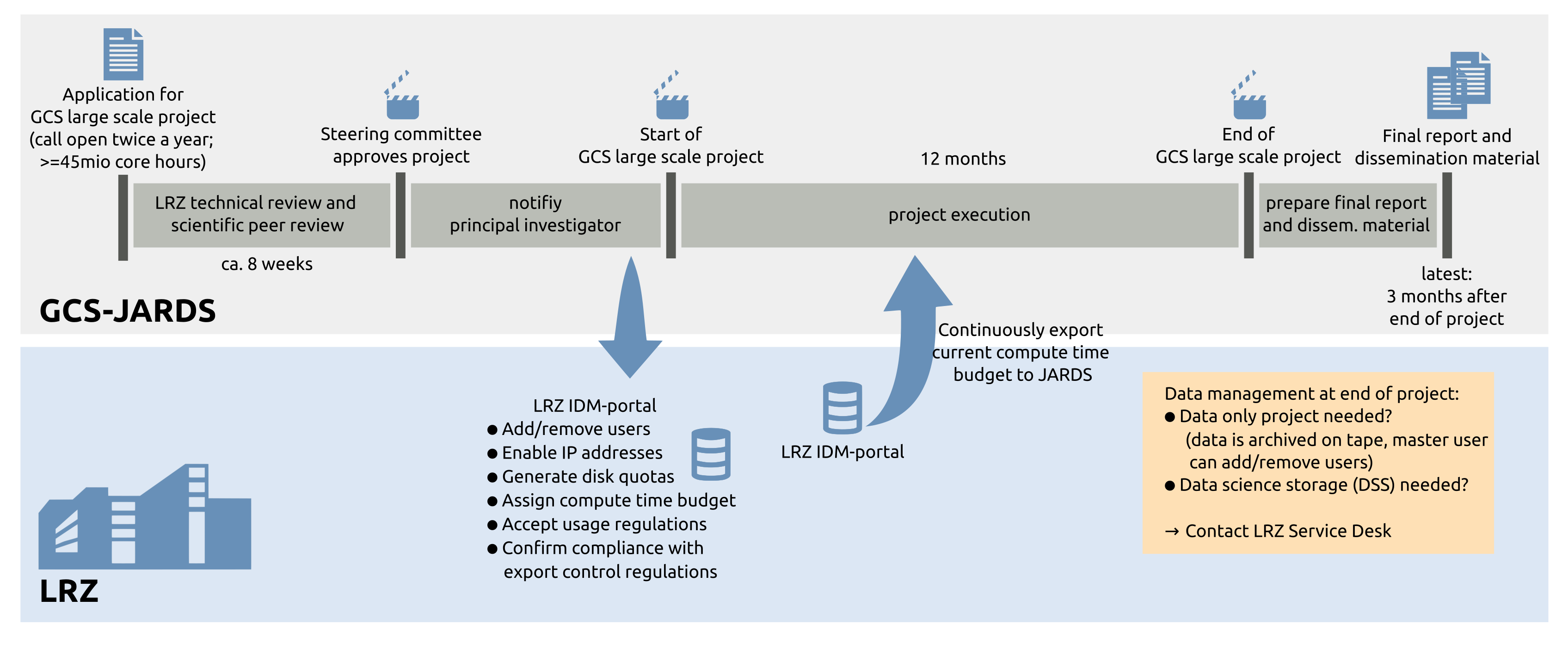

Application process

The image shows the process of applying for a GCS large scale project on SuperMUC-NG. All applications for projects on SuperMUC are managed through the GCS-JARDS tool, hosted by GCS, the Gauss Centre for Supercomputing. After finalizing your application within the GCS-JARDS website, you will be asked to print out and sign the "Principal Investigator’s Agreement for Access to HPC resources". Scan the signed document and email it to HPC-Benutzerverwaltung@lrz.de. Once your project is approved, your SuperMUC-NG project will be created by LRZ. GCS-JARDS is used to manage the review process, collect status reports, final reports, dissemination material, and to manage project extensions.

Address of the GCS-Coordination Office for Large Scale Calls

GCS-Coordination Office

Jülich Supercomputing Centre

Forschungszentrum Jülich

52425 Jülich

Germany

E-mail: coordination-office@gauss-centre.eu