Decommissioned CoolMUC-3

System Overview

| Hardware | |
|---|---|
| Number of nodes | 148 |
| Cores per node | 64 |
| Hyperthreads per core | 4 |
| Core nominal frequency | 1.3 GHz |
| Memory (DDR4) per node | 96 GB (bandwidth 80.8 GB/s) |
| High Bandwidth Memory per node | 16 GB (bandwidth 460 GB/s) |
| Bandwidth to interconnect per node | 25 GB/s (2 links) |
| Number of Omnipath switches (100SWE48) | 10 + 4 (48 ports each) |
| Bisection bandwidth of interconnect | 1.6 TB/s |
| Latency of interconnect | 2.3 µs |
| Peak performance of system | 459 TFlop/s |

| Infrastructure | |
|---|---|
| Electric power of fully loaded system | 62 kVA |
| Percentage of waste heat to warm water | 97% |
| Inlet temperature range for water cooling | 30 … 50 °C |
| Temperature difference between outlet and inlet | 4 … 6 °C |

| Software (OS and development environment) | |
|---|---|
| Operating system | SLES12 SP2 Linux |
| MPI | Intel MPI 2017, alternatively OpenMPI |
| Compilers | Intel icc, icpc, ifort 2017 |
| Performance libraries | MKL, TBB, IPP |
| Tools for performance and correctness analysis | Intel Cluster Tools |

The performance numbers in the above table are theoretical and cannot be reached by any real-world application. As a measure of the actually observable bandwidth of the high bandwidth memory, the STREAM benchmark yielded approximately 450 GB/s per node, and the committed LINPACK performance of the complete system was 255 TFlop/s.
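
To relate the measured and committed figures to the theoretical ones, the ratios can be worked out directly. The following is a minimal sketch that uses only the numbers quoted above; the variable and function names are illustrative and not part of any LRZ tooling.

```python
# Figures taken from the table and the paragraph above.
PEAK_HBM_BANDWIDTH_GBS = 460.0     # theoretical HBM bandwidth per node
STREAM_HBM_BANDWIDTH_GBS = 450.0   # approximate STREAM result per node
PEAK_SYSTEM_TFLOPS = 459.0         # theoretical peak of the whole system
LINPACK_COMMIT_TFLOPS = 255.0      # committed LINPACK performance


def fraction_of_peak(measured: float, peak: float) -> float:
    """Return the measured value as a fraction of the theoretical peak."""
    return measured / peak


stream_fraction = fraction_of_peak(STREAM_HBM_BANDWIDTH_GBS, PEAK_HBM_BANDWIDTH_GBS)
linpack_fraction = fraction_of_peak(LINPACK_COMMIT_TFLOPS, PEAK_SYSTEM_TFLOPS)

print(f"STREAM reaches {stream_fraction:.1%} of peak HBM bandwidth")    # 97.8%
print(f"LINPACK commitment is {linpack_fraction:.1%} of system peak")   # 55.6%
```

On these numbers, the bandwidth-bound benchmark comes much closer to its theoretical limit than the compute-bound one.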

Many-core Architecture, the “Knights Landing” Processor

The processor generation installed in this system was the first from Intel to be stand-alone; previous generations were available only as accelerators, which greatly added to the complexity and effort required to provision, program, and use such systems. The LRZ cluster, in contrast, could be installed with a standard operating system and used with the same programming models familiar to users of Xeon-based clusters. The high-speed interconnect between the compute nodes was realized by an on-chip interface and a mainboard-integrated dual-port adapter, which benefited latency-bound parallel applications in comparison to PCI-card interconnects.

A further feature of the architecture was the closely integrated MCDRAM, also known as “High-Bandwidth Memory” (HBM). The bandwidth of this memory area was several times higher than that of the node's conventional DDR4 memory (460 GB/s versus 80.8 GB/s in the table above). It could be configured as cache memory, as directly addressable memory, or as a 50/50 hybrid of the two. The vector units had also been expanded: each core contained two AVX-512 VPUs and could therefore, when multiplication and addition operations were combined, perform 32 double-precision floating-point operations per cycle. Each pair of cores was tightly coupled and shared a 1 MB L2 cache to form a “tile”, and 32 tiles shared a 2-dimensional interconnect with a bisection bandwidth of over 700 GB/s, over which cache-coherence and data traffic flowed in various user-configurable modes. However, a reboot of the affected nodes was required to change configurations, limiting practical use.
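
The figure of 32 double-precision operations per cycle follows from the vector width. The sketch below works through that arithmetic and extends it to one node at the nominal clock from the table; the constant names are illustrative. The per-node result uses the nominal 1.3 GHz and is not meant to reproduce the system peak quoted in the table, since the source does not state which clock that figure assumes.

```python
VECTOR_WIDTH_BITS = 512      # AVX-512 register width
DOUBLE_WIDTH_BITS = 64       # size of one double-precision value
VPUS_PER_CORE = 2            # two vector units per core (from the text)
FLOPS_PER_FMA = 2            # a fused multiply-add counts as two operations

CORES_PER_NODE = 64          # from the hardware table
NOMINAL_FREQUENCY_GHZ = 1.3  # from the hardware table

# Number of doubles processed by one vector instruction.
lanes_per_vpu = VECTOR_WIDTH_BITS // DOUBLE_WIDTH_BITS

# Operations per cycle when both VPUs issue a fused multiply-add.
flops_per_cycle_per_core = VPUS_PER_CORE * lanes_per_vpu * FLOPS_PER_FMA

# Theoretical node peak at the nominal clock, in GFlop/s.
node_peak_gflops = CORES_PER_NODE * flops_per_cycle_per_core * NOMINAL_FREQUENCY_GHZ

print(lanes_per_vpu)              # 8
print(flops_per_cycle_per_core)   # 32
print(round(node_peak_gflops, 1)) # 2662.4
```

The calculation also shows why vectorization mattered so much on this architecture: scalar code that does not use fused multiply-add on full vectors reaches only a small fraction of the 32 operations per cycle.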

Because of the low core frequency as well as the small per-core memory of its nodes, the system was not suited for serial throughput workloads, even though the instruction set permitted execution of legacy binaries. For best performance, a significant optimization effort for existing parallel applications had to be undertaken. To make efficient use of the memory and exploit all levels of parallelism in the architecture, a hybrid approach (e.g. using both MPI and OpenMP) was typically considered best practice. Restructuring of data layouts was often required in order to achieve cache locality, a prerequisite for effectively using the broader vector units. For codes that required the distributed memory paradigm with small message sizes, the integration of the Omnipath network interface on the chip set of the computational node could bring a significant performance advantage over a PCI-attached network card.
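
The trade-off behind the hybrid approach can be illustrated with simple arithmetic on the node figures from the hardware table. The following sketch lists the ways 64 cores can be split into MPI ranks and OpenMP threads (one thread per core) and shows the DDR4 memory available to each rank. The function name is hypothetical, and an even split of memory across ranks is an assumption made for illustration only.

```python
CORES_PER_NODE = 64    # from the hardware table
DDR_GB_PER_NODE = 96   # from the hardware table


def hybrid_layouts(cores: int = CORES_PER_NODE, mem_gb: float = DDR_GB_PER_NODE):
    """List (ranks, threads_per_rank, mem_per_rank_gb) for every even split.

    Assumes one OpenMP thread per core and memory divided equally
    between the MPI ranks of a node.
    """
    result = []
    for ranks in range(1, cores + 1):
        if cores % ranks == 0:
            result.append((ranks, cores // ranks, mem_gb / ranks))
    return result


layouts = hybrid_layouts()
for ranks, threads, mem in layouts:
    print(f"{ranks:2d} ranks x {threads:2d} threads: {mem:5.1f} GB DDR4 per rank")
```

With 64 single-threaded ranks, each rank is left with 1.5 GB, which is the "small per-core memory" mentioned above; fewer ranks with more threads each leave more memory per process.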

LRZ had acquired know-how over the preceding three years in optimizing for many-core systems by collaborating with Intel. This collaboration included tuning codes for optimal execution on the previous-generation “Knights Corner” accelerator cards used in the SuperMIC prototype system; guidance on how to do such optimization was documented on the LRZ web server and supplied on a case-by-case basis by the LRZ application support staff. The Intel development environment (“Intel Parallel Studio XE”), which included compilers, performance libraries, an MPI implementation, and additional tuning, tracing and diagnostic tools, assisted programmers in establishing good performance for applications. Courses on programming many-core systems as well as on using the Intel toolset were regularly scheduled within the LRZ course program.

Omnipath Interconnect

With its acquisition of network technologies in the last decade, Intel had chosen a new strategy for networks, namely the integration of the network into the processor architecture. The CoolMUC-3 cluster marked the first time the Omnipath interconnect, in its already mature first generation, was put into service at the LRZ. It was distinguished by markedly lower application latencies, higher achievable message rates, and high aggregate bandwidths at a better price than competing hardware technologies. The LRZ used this system to gather experience with the management, stability, and performance of the new technology.

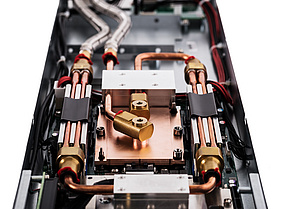

Cooling Infrastructure

LRZ was already a pioneer in the introduction of warm-water cooling in Europe. CooLMUC-1, installed in mid-2011 by MEGWare, was the first such system at the LRZ. A little more than a year later, the IBM/Lenovo 3-PFlop system SuperMUC went into service, the first system that allowed an inlet temperature of around 40 °C, in turn allowing year-round chillerless cooling. Furthermore, the high water temperatures allowed this waste energy to be captured in the form of additional cooling power for the remaining air-cooled and cool-water-cooled components (e.g. storage). In 2016, after a detailed pilot project in collaboration with the company Sortech, an adsorption cooling system was put into service for the first time, converting roughly half of the Lenovo cluster’s waste heat into cooling capacity.

CooLMUC-3 took the next step in improving energy efficiency. Via the introduction of water cooling for additional components like power supplies and network components, it was possible to thermally insulate the racks and virtually eliminate the emission of heat (only 3% of the electrical energy) to the server room.

CoolMUC-3 Features

| Purpose of feature | Value to be specified | Effect |
|---|---|---|
| Select cluster mode | quad | "quadrant"; affinity between cache management and memory. Recommended for everyday use. For certain shared-memory workloads in which the application can use all cores in a single process through a threading library such as OpenMP or TBB, this mode can also provide better performance than Sub-NUMA clustering mode. |
| Select cluster mode | snc4 | "Sub-NUMA clustering"; affinity between tiles, cache management and memory. NUMA-optimized software can profit from this mode. This mode is suitable for distributed memory programming models using MPI or hybrid MPI-OpenMP. Proper pinning of tasks and threads is essential. |
| Select cluster mode | a2a | "alltoall"; no affinity between tiles, cache management and memory. Not recommended because performance is degraded. |
| Select memory mode | flat | High bandwidth memory is operated as regular memory mapped into the address space. Note: due to SLURM limitations, the maximum available memory per node for a job will still only be 96 GB. Using "numactl -p" or the Memkind library is recommended. |
| Select memory mode | cache | High bandwidth memory is operated as cache to DDR memory. |
| Select memory mode | hybrid | High bandwidth memory is evenly split between regular memory (8 GB) and cache (8 GB). |
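
The feature values above can be summarised as a small lookup that checks a chosen pair of modes and reports how the 16 GB of high bandwidth memory is divided. This is a minimal sketch based only on the table: the helper `describe_node_config` and its field names are hypothetical, and how the values are actually passed to the scheduler is not shown here.

```python
# Values transcribed from the feature table above.
CLUSTER_MODES = {"quad", "snc4", "a2a"}

# memory mode -> (HBM GB used as regular memory, HBM GB used as cache)
MEMORY_MODES = {
    "flat": (16, 0),
    "cache": (0, 16),
    "hybrid": (8, 8),
}

DDR_GB_PER_NODE = 96  # from the hardware table


def describe_node_config(cluster_mode: str, memory_mode: str) -> dict:
    """Hypothetical helper: validate a mode pair and summarise the memory layout."""
    if cluster_mode not in CLUSTER_MODES:
        raise ValueError(f"unknown cluster mode: {cluster_mode!r}")
    if memory_mode not in MEMORY_MODES:
        raise ValueError(f"unknown memory mode: {memory_mode!r}")
    hbm_addressable_gb, hbm_cache_gb = MEMORY_MODES[memory_mode]
    return {
        "cluster_mode": cluster_mode,
        "memory_mode": memory_mode,
        "hbm_addressable_gb": hbm_addressable_gb,
        "hbm_cache_gb": hbm_cache_gb,
        # Hardware view only; per the table, a job is still limited to 96 GB.
        "hardware_addressable_gb": DDR_GB_PER_NODE + hbm_addressable_gb,
    }


config = describe_node_config("quad", "flat")
print(config)
```

In flat mode the hardware exposes 112 GB of addressable memory, but as noted in the table a job could still only be granted 96 GB, so the high bandwidth memory had to be targeted explicitly, for example with "numactl -p" or the Memkind library.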