Workshop: SuperMUC-NG Status and Results Workshop 2021

June 8-10, 2021

Content

Due to the COVID-19 pandemic, the workshop was held as a fully virtual event. This gave us the opportunity to extend the workshop to three full days, which covered:

- Scientific talks in the morning sessions: 25 projects presented their work in 15-minute talks, each followed by 5 minutes of Q&A. Selected talks are also available on LRZ’s YouTube channel.

- Extended User Forum in the afternoons: LRZ colleagues presented services and upcoming developments in HPC@LRZ. Each topic was introduced in a short presentation, followed by an extended discussion to gather user feedback.

The science presented at the workshop, together with further exciting HPC results obtained on SuperMUC Phase 1, SuperMUC Phase 2, and SuperMUC-NG, is collected in the latest book (https://doku.lrz.de/display/PUBLIC/Books+with+results+on+LRZ+HPC+Systems), which can be downloaded free of charge.

Organizer

Dr. Helmut Brüchle

Agenda

Tuesday, June 8, 2021

| Time (CEST) | Talk Title | Speaker | Abstract |

|---|---|---|---|

| 9:30 | Welcome; Zoom netiquette | Dieter Kranzlmüller, Volker Weinberg | |

| 9:40 | | Volker Springel, Max Planck Institute for Astrophysics, Garching | |

| 10:10 | Talk 2: Numerical investigations of cavitating multiphase flows | Theresa Trummler, Institute of Aerodynamics and Fluid Mechanics, Department of Mechanical Engineering, TUM | We numerically investigate cavitation phenomena using the compressible flow solver CATUM. Recent research has focused on numerical cavitation erosion prediction and on cavitation phenomena in injector nozzles. The presentation includes results from bubble collapse studies, high-resolution simulations of a cavitating jet, and large-eddy simulations of a cavitating nozzle flow with subsequent injection into a gaseous ambient. |

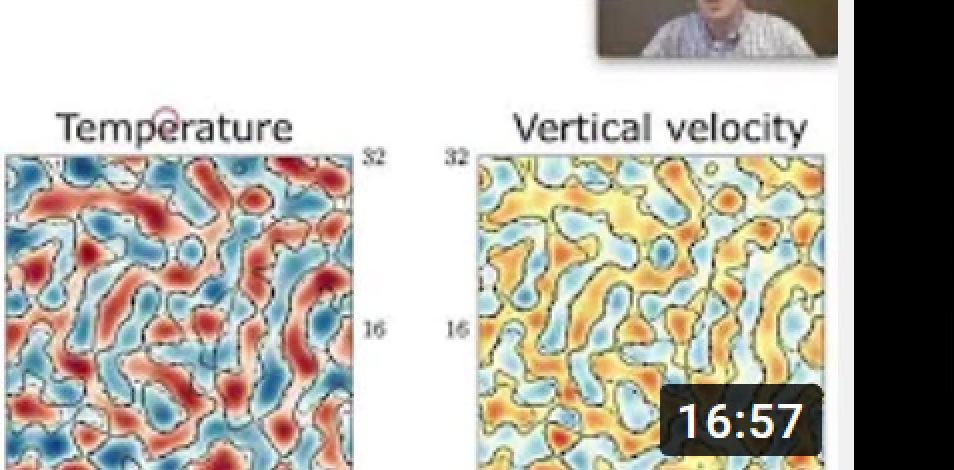

| 10:30 | | Dmitry Krasnov, Technische Universität Ilmenau, Department of Mechanical Engineering, Fluid Mechanics Group | Turbulent convection is an essential process for transporting heat across a fluid layer or closed domain. In many astrophysical and technological applications of convection, the working fluid is characterized by a very low dimensionless Prandtl number Pr = ν/κ, which relates the kinematic viscosity ν to the thermal diffusivity κ. Two important cases are (i) turbulent convection in the outer shell of the Sun at Pr ∼ 10^-6, in the presence of rotation, radiation, magnetic fields, and even changes of the chemical composition close to the surface, and (ii) turbulent heat transfer in the cooling blankets of nuclear fusion reactors at Pr ∼ 10^-2. These blankets consist of rectangular ducts that are exposed to the very strong magnetic fields that keep the 100-million-kelvin plasma confined. Our understanding of the complex interaction of turbulence with the other physical processes in these two examples is still incomplete. In this project we present high-resolution direct numerical simulations of the simplest setting of a turbulent convection flow, namely Rayleigh-Bénard convection in a layer and in a straight duct, uniformly heated from below and cooled from above. The simulations help to reveal some of these aspects at reduced physical complexity and to discuss the basic heat transfer mechanisms that many of these applications have in common. |

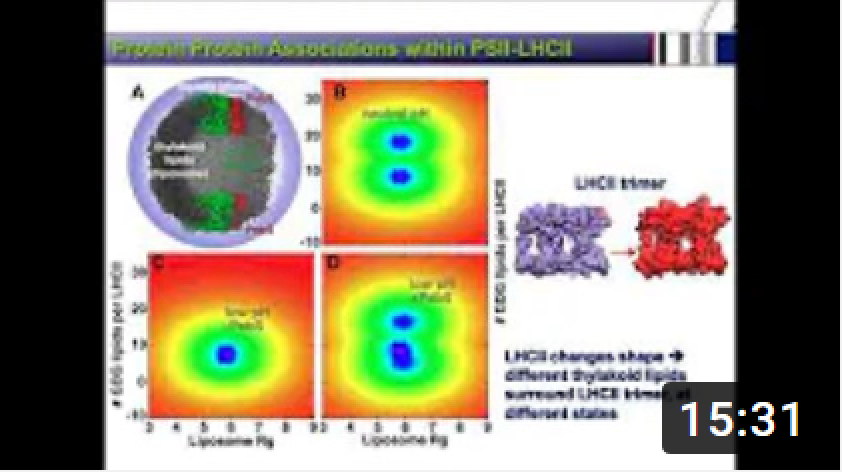

| 10:50 | | Vangelis Daskalakis, Department of Chemical Engineering, Cyprus University of Technology | Higher plants operate a delicate switch between light-harvesting and photoprotective modes within the Light Harvesting Complexes (LHCII) of Photosystem II (PSII). The switch is triggered by an excess ΔpH across the thylakoid membranes, which also activates the photoprotective PSII subunit S (PsbS). PsbS has been proposed to act as a seed for LHCII aggregation/clustering. Understanding the mechanism by which this process occurs is crucial for crop and biomass yields. Here we have run large-scale molecular simulations of many copies of the coarse-grained major LHCII trimer at low or excess ΔpH, also in complex with different amounts of PsbS, within the thylakoid membrane. The potential of the different LHCII states to cluster together has been probed in terms of the free energies involved, employing the Parallel-Tempering Metadynamics method in the Well-Tempered Ensemble (PTmetad-WTE). A sequence of events is revealed that leads from the induction of excess ΔpH to LHCII-PsbS aggregation under photoprotection. |

| 11:10 - 11:30 | Coffee break | ||

| Time (CEST) | Talk Title | Speaker | Abstract |

|---|---|---|---|

| 11:30 | | Richard Stevens, Max Planck Center Twente for Complex Fluid Dynamics and Physics of Fluids Group, University of Twente | Research on thermal convection is relevant for a better understanding of astrophysical, geophysical, and industrial applications. We aim to study thermal convection in asymptotically large systems and at very high Rayleigh numbers Ra. In this talk we discuss simulations of very large aspect-ratio systems at high Ra and the flow organization in so-called thermal superstructures. In experiments, the highest Ra values have been reached using cryogenic helium or pressurized gases. Although these experiments are performed in geometrically similar devices, the results differ for Ra > 5×10^11. We want to use controlled simulations to systematically study this high-Ra regime. So far, the highest-Ra simulation has reached Ra = 2×10^12; in this project we perform simulations at Ra = 10^13, which allows a one-to-one comparison with the Göttingen U-Boat experiments on the onset of ultimate thermal convection. |

| 11:50 | | Leonhard Rannabauer, Department of Informatics, TUM | |

| 12:10 | Talk 7: Kinetic Simulations of Astrophysical and Solar Plasma Turbulence | Daniel Groselj, Columbia University, New York | Using the state-of-the-art computing resources of SuperMUC-NG, we perform massively parallel kinetic simulations of microscale turbulence in astrophysical and space plasmas. This includes, in particular, the study of magnetic reconnection in small-scale turbulent fields and of microturbulence upstream of relativistic shocks with mixed electron-ion-positron particle compositions. The latter is the subject of this talk. The study is motivated by external shocks in gamma-ray bursts (GRBs). How exactly the structure of external GRB shocks, and the resulting particle acceleration, depend on the particle composition has so far been largely unknown. We show that even moderate changes in the number of electron-positron pairs per ion significantly affect the nature of the self-generated microturbulence upstream of the shock. These findings have important implications for the modeling of the early afterglow emission of GRBs and place additional constraints on the maximum external magnetization that still allows for particle acceleration. |

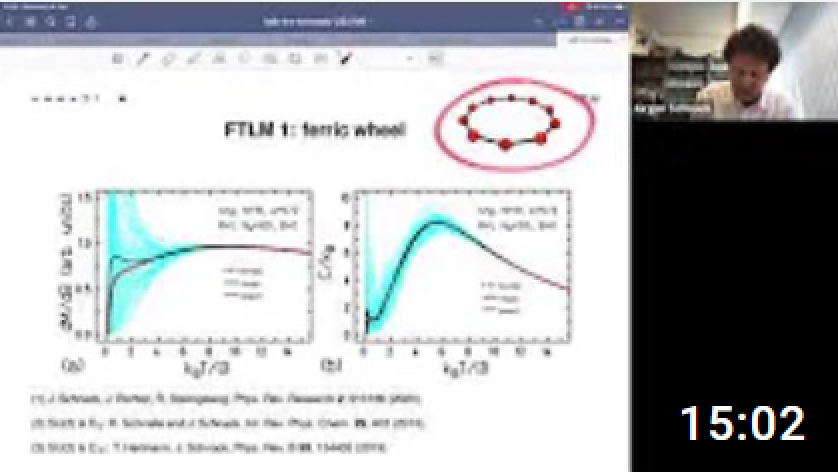

| 12:30 | | Jürgen Schnack, Universität Bielefeld, Fakultät für Physik | The kagome lattice antiferromagnet is a paradigmatic quantum spin system that exhibits many unusual properties. In my contribution I will explain how we approximate thermodynamic observables such as the magnetization and heat capacity, and how we model the phenomenon of magnon crystallization close to the saturation field. Mathematically, these problems constitute huge matrix-vector calculations, for which SuperMUC-NG turned out to be an ideal platform. |

| 12:50 - 15:00 | Lunch break | ||

| 15:00 | GCS EuroHPC activities and GCS smart scaling strategy | Claus-Axel Müller (GCS) | GCS is Germany’s leading supercomputing institution. It is a non-profit organization that combines the three national supercomputing centres (HLRS, JSC, and LRZ) into Germany’s most powerful supercomputing organization. Its world-class HPC resources are openly available to national and European researchers from academia and industry. This session will give an overview of the main EuroHPC activities and the GCS smart scaling strategy. |

| 15:30 | Future Computing@LRZ | Josef Weidendorfer | The "Future Computing Group" is a new team at LRZ that drives efforts towards a better understanding of sensible architecture options for future HPC systems. In this talk, I will present the challenges, objectives, and planned steps. To be successful, we require input from you, our LRZ users. Please take part in our questionnaire, and send us a short message at "fc@lrz.de" if you can share more details about your code and requirements with us. |

| 16:00 - 17:00 | SuperMUC-NG Phase 2 | Gerald Mathias, Michael Steyer, Jeff McVeigh (Intel) | Colleagues from LRZ and Intel will present an outlook on the SuperMUC-NG Phase 2 system. We will discuss the configuration, software environment, and programming models. |

Wednesday, June 9, 2021

| Time (CEST) | Talk Title | Speaker | Abstract |

|---|---|---|---|

| 9:30 | | Hans-Thomas Janka & Robert Bollig, Max-Planck-Institut für Astrophysik, Garching | Over the past years, the PI's group at MPA/Garching has demonstrated, with computing resources on SuperMUC, that the neutrino-driven mechanism can, in principle, succeed in driving the supernova explosions of massive stars in three dimensions. However, two open questions could be addressed only in our recent projects: How can the resistance against explosion be overcome in collapsed stars of considerably more than 10 solar masses? Can the neutrino-driven mechanism explain the energies observed in typical core-collapse supernovae? In GAUSS project pr53yi we showed that the inclusion of muon formation in the hot nuclear medium of the new-born neutron star, as well as the presence of large-scale perturbations in the convectively burning oxygen shell of the pre-collapse star, decisively foster the onset of the supernova explosion. In LRZ project pn69ho we succeeded in following the neutrino heating for more than 7 seconds and demonstrated for the first time that the explosion energy gradually grows to the canonical value of 1 B = 1 bethe = 10^44 J over such long periods of evolution. These computationally challenging calculations, which demanded considerably more computing power than our previous simulations, became possible thanks to the performance gains of SuperMUC-NG in combination with crucial improvements in the numerical implementation of the underlying physical model. The talk reports the essential results and the relevant modeling aspects. |

| 10:10 | | Josef Hasslberger, Numerical Methods in Aerospace Engineering, Bundeswehr University Munich | The statistical behaviour of the invariants of the velocity gradient tensor and of the flow topologies for Rayleigh-Bénard convection of Newtonian fluids in cubic enclosures has been analysed using Direct Numerical Simulations (DNS) for a range of Rayleigh numbers (Ra = 10^7 to 10^9) and Prandtl numbers (Pr = 1 and 320). The behaviour of the second and third invariants of the velocity gradient tensor suggests that the bulk region of the flow at the core of the domain is vorticity-dominated, whereas the regions in the vicinity of the cold and hot walls, in particular the boundary layers, are strain-rate-dominated; this behaviour is independent of the choice of Ra and Pr within the range considered here. Accordingly, the focal topologies S1 and S4 remain predominant in the bulk region of the flow, and the volume fraction of nodal topologies increases in the vicinity of the active hot and cold walls for all cases considered here. However, remarkable differences in the joint probability density functions (PDFs) of the second and third invariants of the velocity gradient tensor (i.e. Q and R) have been found in response to variations of Pr. The classical teardrop shape of the joint PDF of Q and R has been observed away from the active walls for all values of Pr, but this behaviour changes close to the heated and cooled walls for high values of Pr (e.g. Pr = 320), where the joint PDF exhibits a shape mirrored about the vertical Q-axis. It has been demonstrated that the junctions at the edges of convection cells are responsible for this behaviour for Pr = 320, which also increases the probability of finding S3 topologies with large negative magnitudes of Q and R. By contrast, this behaviour is not observed in the Pr = 1 case. These differences between flow topology distributions in Rayleigh-Bénard convection in response to Pr suggest that the modelling strategy for turbulent natural convection of gaseous fluids may not be equally well suited for simulations of turbulent natural convection of liquids with high values of Pr. |

| 10:30 | Talk 11: Direct numerical simulation of premixed flame-wall interaction in turbulent boundary layers | Umair Ahmed, Newcastle University, School of Engineering | |

| 10:50 | Talk 12: Supercritical Water is not Hydrogen Bonded | Jan Noetzel, Ruhr-Universität Bochum | The structural dynamics of liquid water are primarily governed by the tetrahedral hydrogen bond network, which is responsible for many unique properties (so-called "anomalies") of water. Here we challenge the common assumption that this feature also dominates the supercritical phase of water by employing extensive ab initio molecular dynamics simulations. A key finding of our simulations is that the average hydrogen bond lifetime is shorter than the period of the intermolecular water-water vibration. This observation implies that two water molecules perform low-frequency intermolecular vibrations irrespective of whether there is an intact hydrogen bond between them or not. Moreover, the very same vibrations can be qualitatively reproduced by isotropic interactions, which leads us to conclude that the supercritical water phase should not be considered an H-bonded fluid. |

| 11:10 - 11:30 | Coffee break | ||

| Time (CEST) | Talk Title | Speaker | Abstract |

|---|---|---|---|

| 11:30 | Talk 13: Interplay between lipids and phosphorylation allosterically regulates interactions between β2-adrenergic receptor and βarrestin-2 | Kristyna Pluhackova, ETH Zürich / Universität Stuttgart | G protein-coupled receptors (GPCRs) are the targets of almost 40% of all drugs today. These drugs (e.g. beta blockers, opioid painkillers and many others) bind to the extracellular binding pocket of the receptors and regulate their activation state. In this way, they direct the binding of the intracellular binding partners, which then trigger signals in the cell. Recently, the concept of modulation of GPCR activity by lipids has emerged. Here, we went one step further and, by means of atomistic molecular dynamics simulations, unraveled how the interplay between membrane lipids and the phosphorylation state of the receptor affects its activation state as well as its complex formation with arrestin. Arrestin, one of the intracellular binding partners of GPCRs, is responsible for receptor desensitisation and removal from the cell membrane. These results provide a solid basis for further investigations of the mechanisms regulating extracellular signal transmission into the cell. We were able to pinpoint residues that are important for stabilizing the arrestin/receptor complex, thus opening possible new pathways for pharmacological intervention. |

| 11:50 | | Andreas Schäfer, Institut für Theoretische Physik, Universität Regensburg | One of the fundamental tasks of particle physics is to better understand the extremely complicated quark-gluon structure of hadrons like the proton. For example, such knowledge is needed to reliably interpret the processes occurring in proton-proton collisions at the Large Hadron Collider at CERN. Because the QCD interaction is extremely strong and the proton is a highly entangled quantum state, the use of non-perturbative techniques, in particular Lattice QCD (LQCD), is indispensable. In fact, LQCD is nowadays treated on the same footing as direct experimental evidence and answers many questions that experiment cannot. |

| 12:10 | Talk 15: Scalable Multi-Physics with waLBerla | Harald Köstler, Friedrich-Alexander-Universität Erlangen-Nürnberg | The open-source massively parallel software framework waLBerla (widely applicable lattice Boltzmann from Erlangen) provides a common basis for stencil codes on structured grids, with a special focus on computational fluid dynamics with the lattice Boltzmann method (LBM). Other codes that build upon the waLBerla core are the particle dynamics module MESA-PD and the finite element framework HyTeG. We present a number of applications that have recently been run on SuperMUC-NG using our software framework. These include solidification, turbulent flows, sediment beds, and fluid-particle interaction phenomena. |

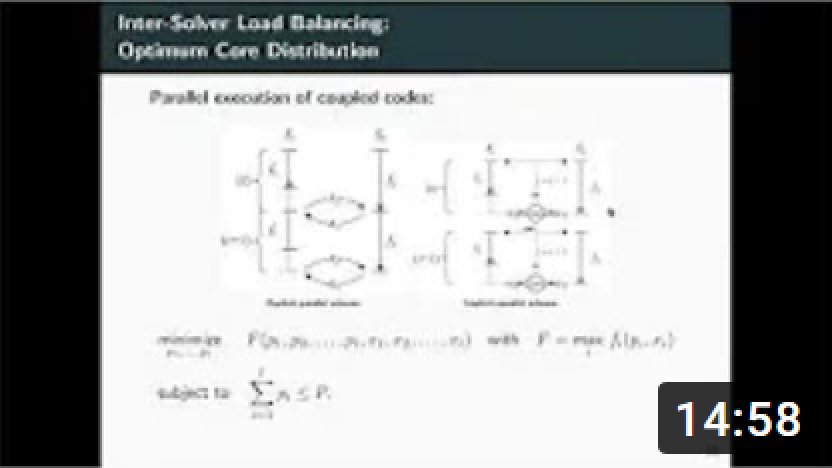

| 12:30 | | Amin Totounferoush, University of Stuttgart, Institute for Parallel and Distributed Systems | We present a data-driven approach for inter-code load balancing in large-scale partitioned multi-physics/multi-scale simulations. We follow a partitioned approach, in which separate codes are used for different physical phenomena, and we use the preCICE library for technical and numerical coupling. The proposed scheme aims to improve the performance of the coupled simulations. Performance Model Normal Form (PMNF) regression is used to find an empirical performance model for each solver. Then, an appropriate optimization problem is derived and solved to find the optimal distribution of compute resources between the solvers. To demonstrate the effectiveness of the proposed method, we present a numerical scalability and performance analysis for a real-world application. We show that the load imbalance is almost eliminated (less than 2%). In addition, owing to the optimal use of computational capacity, the proposed method considerably reduces the simulation time. |

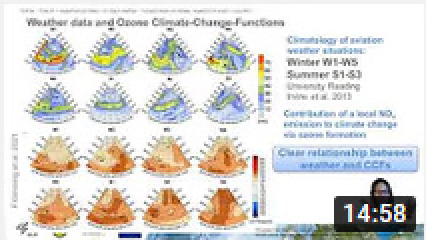

| 12:50 | | Sigrun Matthes, DLR, Institut für Physik der Atmosphäre, Oberpfaffenhofen | The modular approach of the Earth-System Chemistry Climate model EMAC/MECO(n) allows experiment designs for multi-scale global numerical simulations with adapted complexity to be developed efficiently. Results from numerical simulations are shown in which the model system is used to study the air quality and climate impacts of road traffic and aviation caused by CO2 emissions and non-CO2 effects. Lagrangian transport schemes allow the generation of MET products for air traffic management when working towards climate-optimized aircraft trajectories. Comprehensive multi-scale global-regional climate chemistry simulations allow reductions in tropospheric ozone levels and improvements in air quality to be quantified when evaluating the effectiveness of mitigation strategies. Growing societal needs arising from a changing atmosphere require a further improved understanding of climate science and pose challenges for HPC in future Earth-System Climate Chemistry modelling. |

| 13:10 - 14:30 | Lunch break | ||

| Time (CEST) | Talk Title | Speaker | Abstract |

|---|---|---|---|

| 14:30 | Compute Cloud@LRZ | Niels Fallenbeck | The LRZ Compute Cloud is a computational service provided by LRZ that aims to provide resources quickly and without hassle. This talk briefly presents this Infrastructure-as-a-Service offering and gives basic information on the characteristics, the hardware, and the limitations of the cloud. This will cover

In the following discussion, questions about the use of the Compute Cloud can be addressed and particular use cases can be discussed. |

| 15:00 | Mentoring of HPC projects@LRZ | André Kurzmann | Starting with the operation of SuperMUC-NG, LRZ and the Gauss Centre for Supercomputing (GCS) offer enhanced support by providing a dedicated mentor for all Large Scale Gauss projects. Mentoring addresses the needs of both users and the GCS centres. The GCS Mentor

Users profit from a more efficient and personalized support structure for their projects, while the three GCS centres benefit from a more efficient use of valuable HPC resources. Please consult the GCS website for more information: https://www.gauss-centre.eu/for-users/user-services-and-support/ |

| 15:30 | Managing HPC Application Software with SPACK@LRZ | Gilbert Brietzke | |

Thursday, June 10, 2021

| Time (CEST) | Talk Title | Speaker | Abstract |

|---|---|---|---|

| 9:30 | Talk 18: Hadron structure observables on a fine lattice at the physical point | Sara Collins, University of Regensburg | |

| 9:50 | | Michael Manhart, Professur für Hydromechanik, Technische Universität München | In this project the flow in partially filled pipes is investigated. This flow can be seen as a model flow for rivers and waste-water channels and represents a fundamental flow problem that is not yet fully understood. However, to date neither high-resolution simulations nor well-resolved experiments have been reported in the literature for this flow configuration. In this project, highly resolved 3D simulations are performed to further the understanding of narrow open-duct flows. The analysis concentrates on the origin of the mean secondary flow and the role of coherent structures, as well as on the time-averaged and instantaneous wall shear stress. |

| 10:10 | Talk 20: Numerical Investigation of Cooling Channels with Strong Property Variations | Alexander Döhring, Institute of Aerodynamics and Fluid Mechanics, Department of Mechanical Engineering, TUM | We present well-resolved large-eddy simulations (LES) of a channel flow, solving the fully compressible Navier-Stokes equations in conservative form. An adaptive look-up table method is used for thermodynamic and transport properties. A physically consistent subgrid-scale turbulence model is incorporated, which is based on the Adaptive Local Deconvolution Method (ALDM) for implicit LES. The wall temperatures are set to enclose the pseudo-boiling temperature at a supercritical pressure, leading to strong property variations within the channel geometry. The hot wall at the top and the cold wall at the bottom produce asymmetric mean velocity and temperature profiles, which result in different momentum and thermal boundary layer thicknesses. The perfect gas assumption is not valid in transcritical flows at the pseudo-boiling position; thus, an enthalpy-based turbulent Prandtl number formulation has to be used. |

| 10:30 | | Emanuele Coccia, Department of Chemical and Pharmaceutical Sciences, University of Trieste | Photocatalysis is an outstanding way to speed up chemical reactions by replacing heat with light as the physical promoter. Plasmonic metallic nanostructures are photocatalytically active. In thermocatalytic experiments, carbon dioxide hydrogenation catalysed by rhodium nanocubes produces a mixture of methane and carbon monoxide. When irradiated by light, rhodium nanocubes efficiently enhance the selectivity towards methane formation only. This selectivity could be induced by plasmon effects, with the generation of hot electrons in the nanostructure, which are in turn injected into the CHO reaction intermediate, favouring the formation of methane. The goal of the project is to simulate the electron dynamics occurring under the experimental conditions, when an electromagnetic pulse interacts with the CHO adsorbed on the nanocube. Our approach is a multiscale quantum/classical scheme formulated in the time domain: CHO and two layers of rhodium atoms (which describe a vertex of the nanocube) are treated at the quantum level of theory, whereas the rest of the nanostructure is represented by means of a polarisable continuum model. The propagation of the time-dependent Schrödinger equation has also been coupled to the theory of open quantum systems, to include (de)coherence as a further design element in plasmon-mediated photocatalysis. The electron dynamics occurring under the influence of an external pulse, plasmon effects, and electronic dephasing/relaxation have been analysed using recently developed time-resolved descriptors. |

| 10:50 | | Jon McCullough, University College London | Simulating blood flow in 3D at the scale of a full human is a challenge that demands a performant code deployed on large-scale HPC resources. The HemeLB code is a fluid flow solver based on the lattice Boltzmann method and has been optimised to simulate flow in the sparse, complex geometries characteristic of vascular domains. Here we discuss the performance observed and the results achieved with HemeLB on SuperMUC-NG in pursuit of simulating blood flow for a virtual human. |

| 11:10 - 11:30 | Coffee break | ||

| Time (CEST) | Talk Title | Speaker | Abstract |

|---|---|---|---|

| 11:30 | Talk 23: Massively Parallel Simulations of Fully Developed Turbulence | Michael Wilczek, Max Planck Institute for Dynamics and Self-Organization | Turbulence is virtually everywhere. For example, it governs our atmosphere and our oceans, as well as their interaction. Due to the inherently random character of turbulence, predictive theories of turbulence necessarily have to be statistical in nature. Developing such theories, on which all modeling applications ultimately rely, remains an outstanding scientific challenge. From a physics perspective, fully developed turbulence constitutes a paradigmatic problem of nonequilibrium statistical mechanics with a large number of strongly interacting degrees of freedom. The challenge of developing a statistical theory of turbulence arises from multi-scale flow structures, which introduce long-range correlations giving rise to complex, scale-dependent statistics. Numerical simulations can provide key insights into the emergence of these structures, their dynamics, and the resulting statistics. In this presentation, I will summarize our recent work along these lines, enabled by simulations on SuperMUC-NG. |

| 11:50 | | Enrico Garaldi, Max-Planck-Institut für Astrophysik, Garching | The study of the first galaxies in the Universe is the new frontier in both galaxy formation and cosmic reionization. These primeval cosmic objects formed when the conditions in the Universe were very different from today; they constitute the building blocks of the cosmic structures populating our Universe and powered its last global phase transition, transforming the inter-galactic medium (IGM, the diffuse gas between galaxies) from a cold, neutral gas into a hot, highly ionised plasma, in what is known as Cosmic Reionization. I will present the Thesan simulation suite, a recent numerical effort providing a comprehensive view, of unprecedented physical fidelity, of the assembly of the first galaxies and their impact on the largest scales in the Universe. After reviewing the physical and numerical approach followed, I will show some preliminary results from these simulations and review the technical challenges that were addressed in the development of this project. |

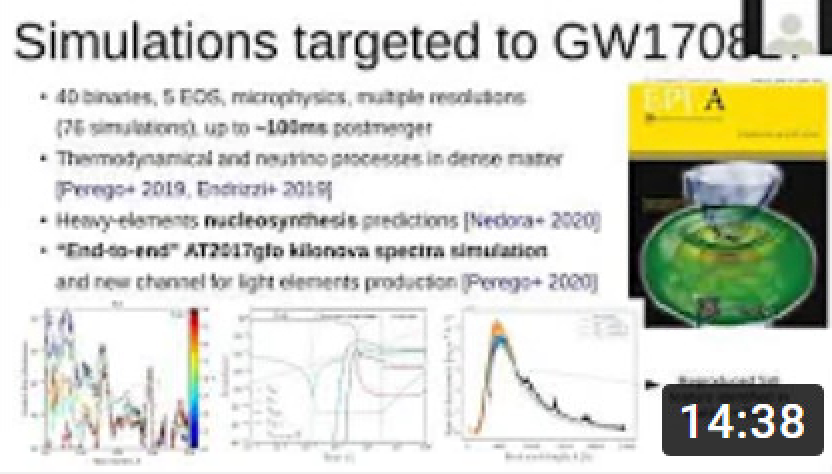

| 12:10 | Binary neutron star mergers: simulation-driven models of gravitational waves and electromagnetic counterparts | Sebastiano Bernuzzi, FSU Jena | This talk reports on the GCS Large-Scale project pn56zo on SuperMUC at LRZ, conducted by the Jena-led Computational Relativity (CoRe) collaboration. The project developed (3+1)D multi-scale and multi-physics simulations of binary neutron star mergers in numerical general relativity for applications to multi-messenger astrophysics. Broken into four subprojects, it focused on two main aspects: (i) the production of high-quality gravitational waveforms suitable for template design and data analysis, and (ii) the investigation of merger remnants and ejecta with sophisticated microphysics, magnetic-field-induced turbulent viscosity, and neutrino transport schemes for the interpretation of kilonova signals. The simulations led to several breakthroughs in the first-principles modeling of gravitational-wave and electromagnetic signals, with direct application to the LIGO-Virgo event GW170817 and its counterpart observations. The HPC resources at LRZ supported a major simulation effort in the field; all data products are (or are being) publicly released to the astrophysics community for further use. |

| 12:30 | Talk 26: Nucleon structure from simulations of QCD with physical quark masses | Jacob Finkenrath, Computation-based Science and Technology Research Center, The Cyprus Institute | |

| 12:50 - 14:00 | Lunch break | ||

| 14:00 | Education and Training | Volker Weinberg | LRZ provides first-class education and training opportunities for the national and European HPC community. We regularly offer education and training on topics such as Data Analytics, Deep Learning and AI, HPC, Optimisation, Programming Languages, Quantum Computing, Application Software, and System and Internet Security. Since 2012, LRZ, as part of the Gauss Centre for Supercomputing (GCS), has been one of currently 14 Training Centres of the Partnership for Advanced Computing in Europe (PRACE), which offer HPC training for researchers from academia and industry from all around Europe. Since 2020, LRZ, as part of GCS, has also been one of 33 National Competence Centres (NCCs) within the new EuroCC project supported by the EuroHPC Joint Undertaking (JU). We will give an overview of the education and training activities offered and planned by LRZ, GCS, PRACE, and EuroCC. During the session we would like to hear from you which topics and course formats you would like us to offer in the future. |

| 14:30 | Data Storage: DSS, Archive and future plans | Stephan Peinkofer | In the first part of this talk we'll focus on a "hidden feature" of SuperMUC-NG, the LRZ Data Science Storage (DSS), which is LRZ’s batteries-included approach to satisfying the demands of data-intensive science. We will discuss what you can do with DSS and how your SuperMUC-NG projects can make use of it. In the second part we’ll introduce the next generation of the HPC Archive System, called Data Science Archive (DSA), and we will show you that the similarity of its name to DSS is no coincidence. For those of you who feel overwhelmed by all the storage options on SuperMUC-NG, we’ll close the session with a special gift. |

| 15:00 | Research Data Management and Workflow Control (slides will be made available) | Stephan Hachinger | In this talk, we will present LRZ's plans for Research Data Management add-ons. According to current planning, these add-ons will augment the LRZ storage services with the possibility to obtain Digital Object Identifiers (DOIs) for your data, to hold basic metadata, to present data products via a Zenodo-like portal, and to have data at LRZ indexed by search engines. The resulting Research Data Management service should enable you to comply with the "FAIR" (Findable, Accessible, Interoperable, Reusable) philosophy of modern research data management, and with the respective requirements of funding agencies or your institutes. It shall also help you with internal data reuse. Initially, the prospective service offering is aimed at data you would like to provide openly (i.e. data without embargo). Furthermore, we will present some ideas from the LEXIS H2020 project (GA 825532) on how research data and mixed Cloud-HPC workflows can be managed together. We aim for a lively discussion on all these ideas. |

| 15:30 | Quantum Computing@LRZ | Luigi Iapichino | In my talk I will describe the strategy of LRZ in the novel field of Quantum Computing, starting from its mission as supercomputing centre and research enabler for the academic user community in Bavaria and Germany. I will review the four directions of our QC plan: on-premise quantum systems, practical QC services, HPC/QC integration (including software and hardware simulators) and user community education. Our first services and projects in this field will be presented. |

| 16:00 - 16:30 | Developing BDAI at LRZ by the Example of BioMed Projects | Nicolay Hammer, Peter Zinterhof | After LRZ was announced as the Bavarian Big Data Competence Centre in 2018, a new team was established during the following months. This eventually led to the formation of LRZ’s ‘Big Data and Artificial Intelligence Team’ (BDAI team) in March 2019. The team currently has 7 members who tackle challenges in various BDAI-related fields. We will briefly introduce the team and its mission and illustrate the challenges of improving and developing BDAI services at LRZ. We will then dive in greater detail into examples from the challenging and promising research and development in BioMed Big Data Analytics and AI applications. |